Amazon.com: Hands-On GPU Computing with Python: Explore the capabilities of GPUs for solving high performance computational problems: 9781789341072: Bandyopadhyay, Avimanyu: Books

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

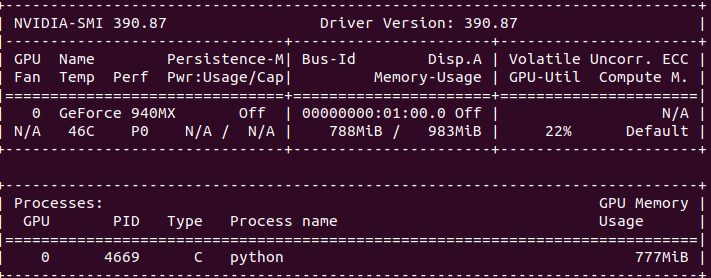

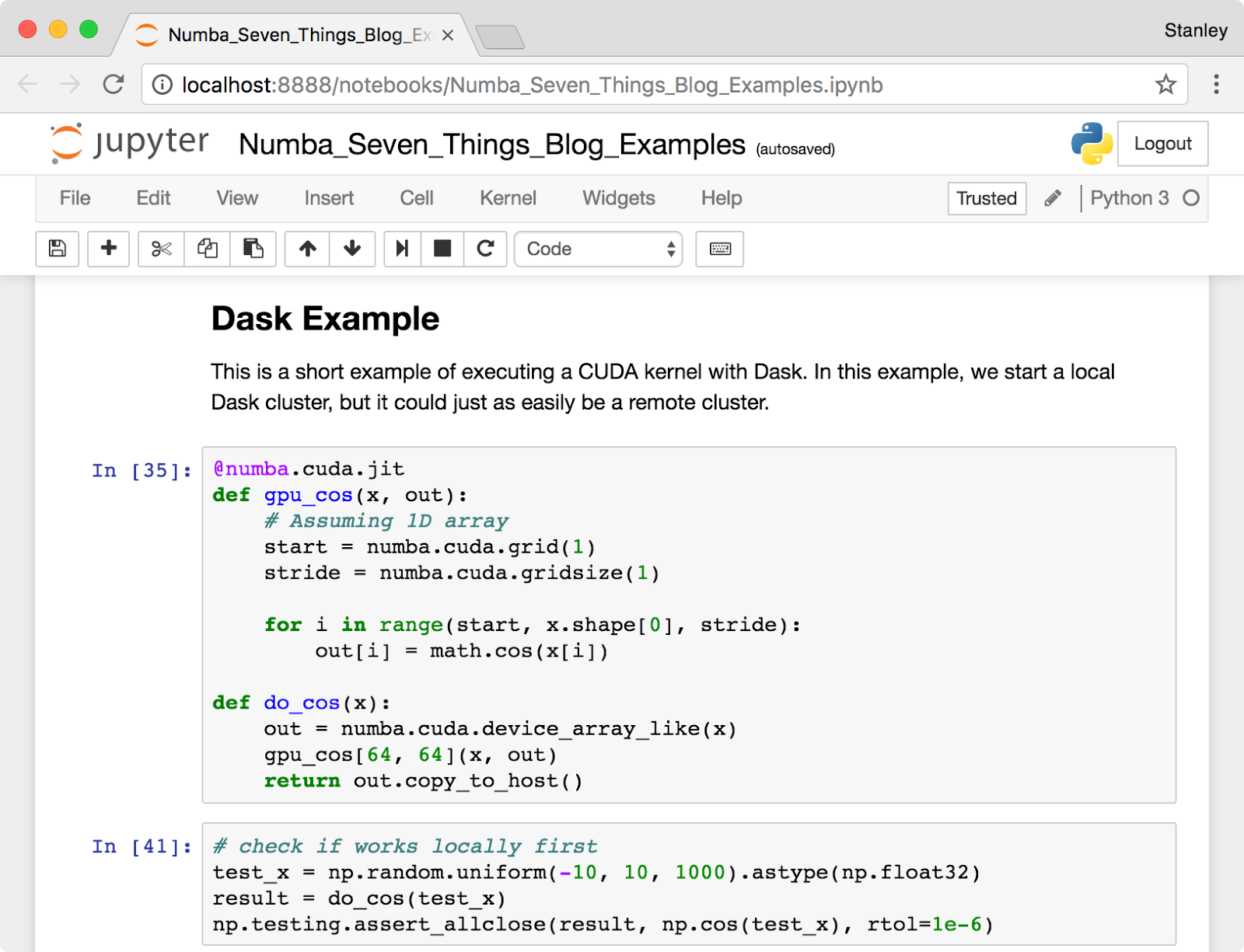

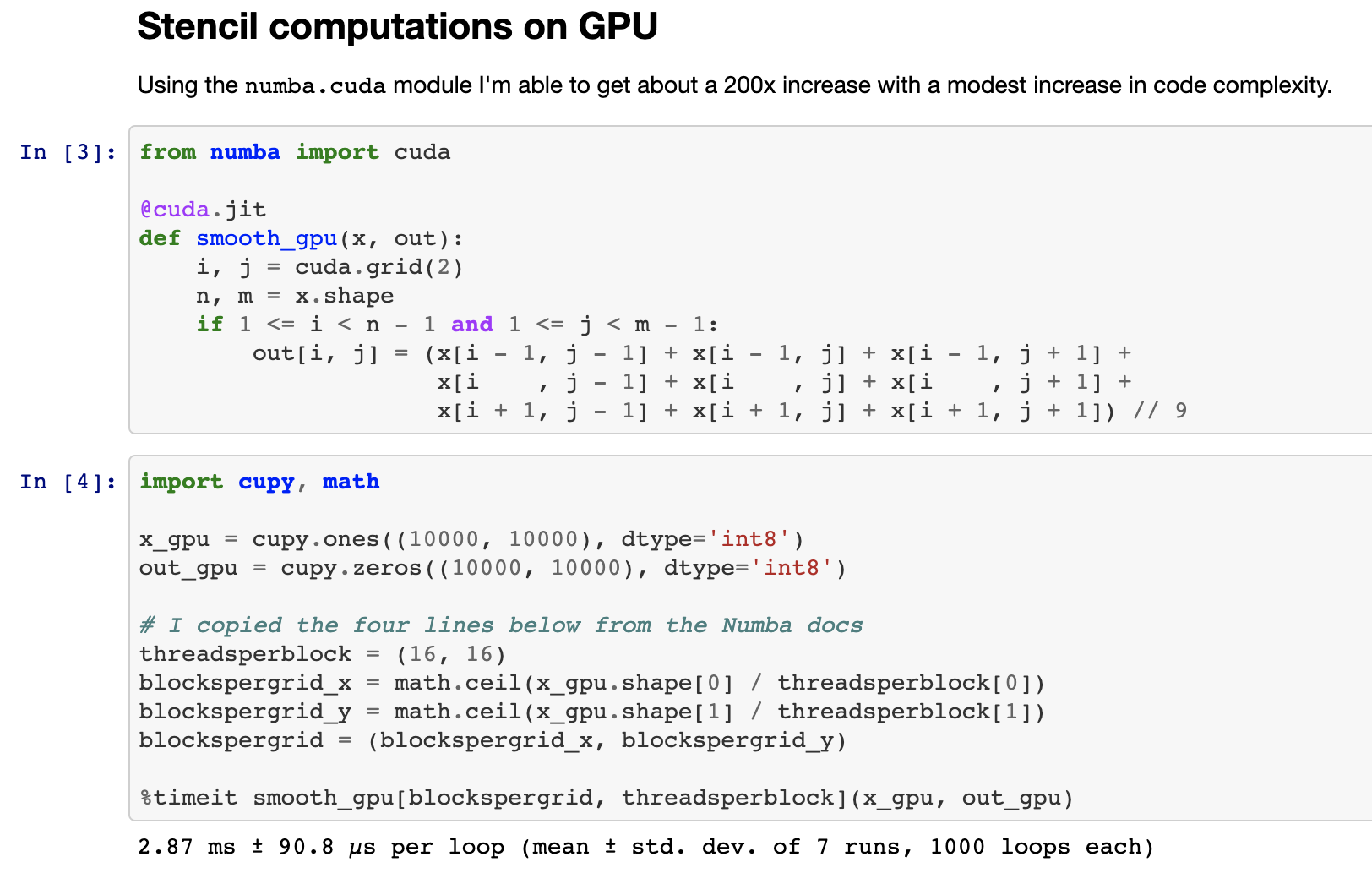

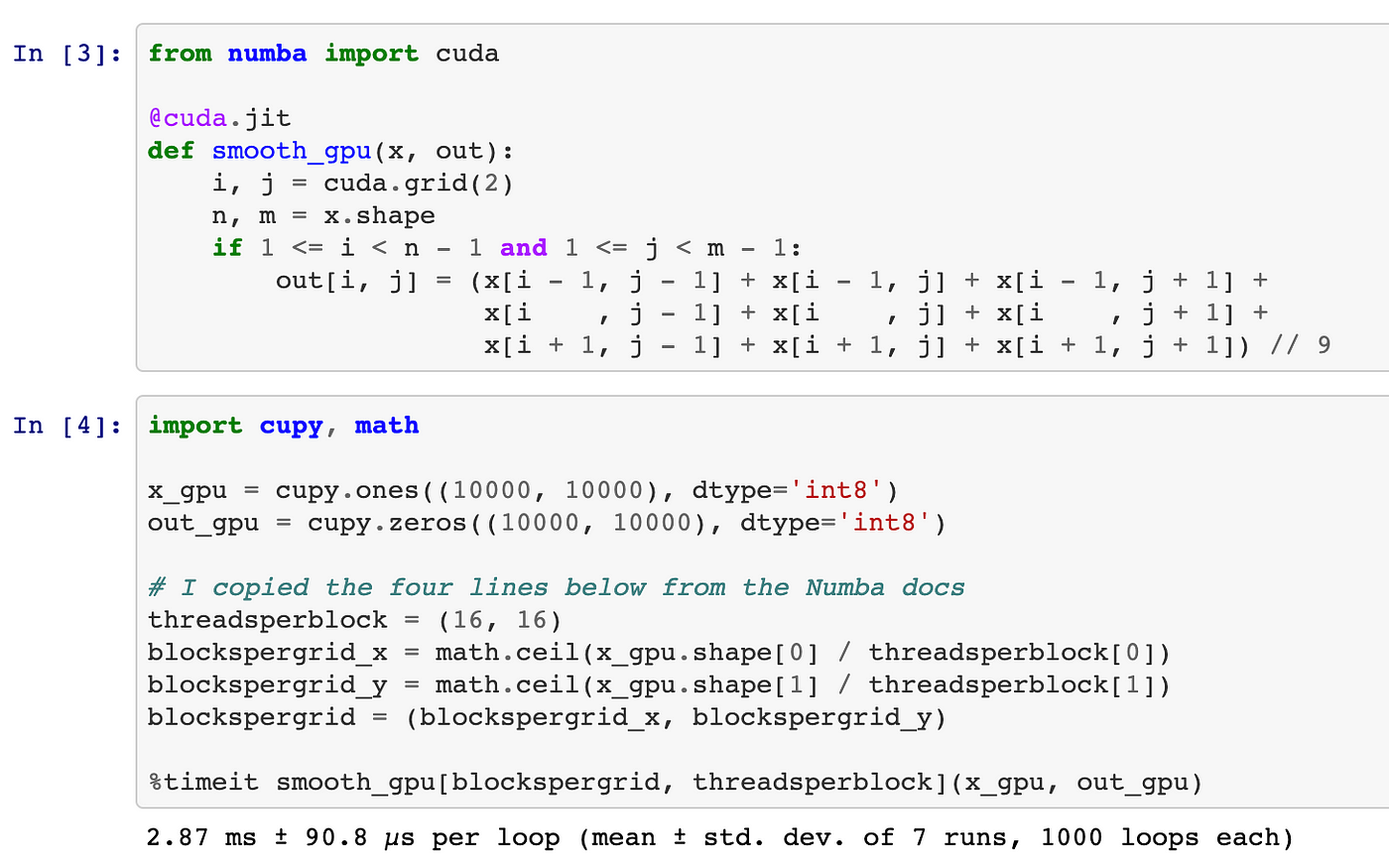

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

Hands-On GPU Programming with Python and CUDA: Explore high-performance parallel computing with CUDA 1, Tuomanen, Dr. Brian, eBook - Amazon.com

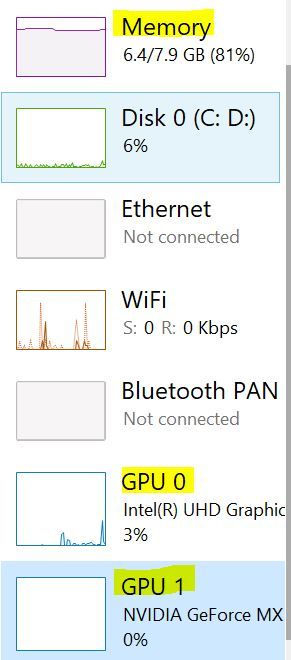

Using the Python Keras multi_gpu_model with LSTM / GRU to predict Timeseries data - Data Science Stack Exchange